Radar vs. LiDAR: Unpacking the All-Weather Sensing Advantage

TL;DR

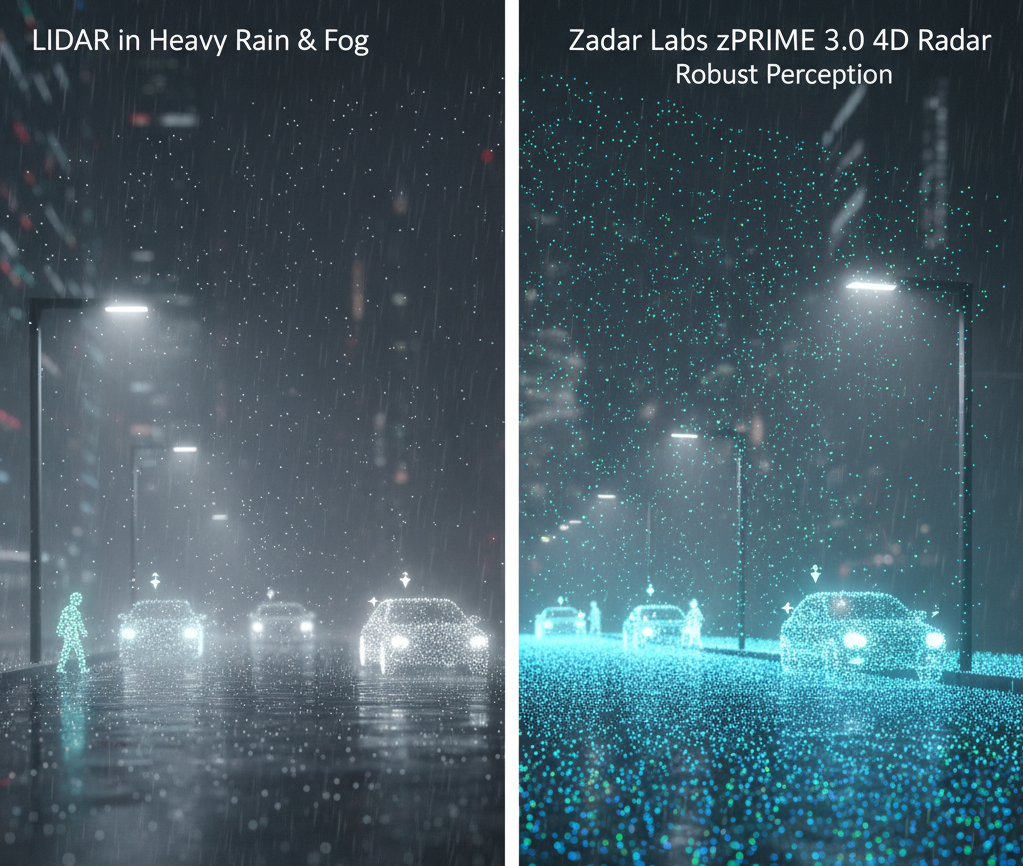

4D imaging radar retains nearly all of its sensing capability in weather conditions that severely degrade LiDAR. For mission-critical autonomous systems, this weather resilience is essential for real-world deployment.

The Visibility Problem in Autonomous Systems

Autonomous vehicles, security systems, and industrial robots all share a critical dependency: reliable environmental perception. Yet the sensors they rely on often fail precisely when visibility matters most—in fog, heavy rain, dust storms, or low-light conditions.

LiDAR has dominated the perception stack for years, but it has a weakness rooted in physics: its near-infrared light scatters off airborne particles. When water droplets, dust, or snow fill the air, effective LiDAR range drops sharply and the point cloud becomes sparse and noisy, in severe cases to the point of being unusable.

How 4D Imaging Radar Changes the Equation

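Unlike optical sensors, radar operates at millimeter wavelengths (automotive units typically use the 76 to 81 GHz band). These wavelengths are far longer than fog droplets and dust particles, so the signal passes through atmospheric obscurants with little scattering. Modern 4D imaging radar adds a fourth dimension, elevation, to the traditional range, velocity, and azimuth measurements. The result is high-resolution 3D environmental mapping with an instantaneous velocity for every detected point.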
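To make the four measurements concrete, here is a minimal sketch of turning one radar detection into a 3D point that carries its velocity. The `RadarDetection` type, its field names, and the axis convention (x forward, y left, z up, azimuth measured from x toward y) are assumptions chosen for illustration, not any vendor's actual data format.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One hypothetical 4D radar detection in sensor-centered spherical coordinates."""
    range_m: float              # distance to the target
    azimuth_rad: float          # horizontal angle, 0 = straight ahead
    elevation_rad: float        # vertical angle, 0 = level with the sensor
    radial_velocity_mps: float  # Doppler velocity along the line of sight


def to_cartesian(det: RadarDetection) -> tuple[float, float, float, float]:
    """Convert a detection to (x, y, z, radial_velocity).

    Assumed convention: x forward, y left, z up.
    """
    horizontal = det.range_m * math.cos(det.elevation_rad)
    x = horizontal * math.cos(det.azimuth_rad)
    y = horizontal * math.sin(det.azimuth_rad)
    z = det.range_m * math.sin(det.elevation_rad)
    return (x, y, z, det.radial_velocity_mps)


# Usage: a target 100 m ahead, slightly left and slightly above the sensor.
point = to_cartesian(RadarDetection(100.0, math.radians(5), math.radians(2), -3.0))
print(point)
```

Without the elevation angle, the same detection could only be placed on a flat plane, which is why a 3-measurement radar cannot easily tell an overhead sign from a stopped vehicle.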

Key Advantages of 4D Imaging Radar

All-Weather Operation: Maintains performance in fog, rain, snow, and dust that degrade or disable optical sensors

Instantaneous Velocity: Every point includes velocity data, enabling immediate classification of moving vs. static objects

Long-Range Detection: Effective beyond 300 meters while maintaining angular resolution

Cost Efficiency: Software-defined architecture enables continuous improvement without hardware replacement
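The instantaneous-velocity advantage listed above can be illustrated with a simple ego-motion check. The sketch below assumes the platform drives straight along the sensor's forward axis with no turning, that negative radial velocity means "approaching," and that a 0.5 m/s threshold is reasonable. The function name and parameters are hypothetical, and a production system would estimate ego-motion more carefully.

```python
import math


def is_moving(
    radial_velocity_mps: float,
    azimuth_rad: float,
    elevation_rad: float,
    ego_speed_mps: float,
    threshold_mps: float = 0.5,  # assumed noise margin, not a measured value
) -> bool:
    """Flag a detection as moving if its Doppler differs from what a static object would show.

    A stationary object seen from a platform moving forward at ego_speed_mps
    appears to approach at ego_speed * cos(azimuth) * cos(elevation).
    """
    expected_if_static = -ego_speed_mps * math.cos(azimuth_rad) * math.cos(elevation_rad)
    residual = radial_velocity_mps - expected_if_static
    return abs(residual) > threshold_mps


# Usage: platform travelling at 20 m/s.
print(is_moving(-20.0, 0.0, 0.0, ego_speed_mps=20.0))  # a wall dead ahead
print(is_moving(-5.0, 0.0, 0.0, ego_speed_mps=20.0))   # a vehicle ahead, pulling away
```

One limitation worth noting: Doppler only captures motion along the line of sight, so an object crossing exactly perpendicular to the beam produces no residual in a single frame.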

Real-World Performance Comparison

Testing in simulated fog conditions (visibility under 50 meters) demonstrates the stark contrast:

LiDAR effective range: reduced by 60-80%

4D imaging radar effective range: maintained at 95%+ baseline
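To see what these percentages mean in meters, the short calculation below applies them to a hypothetical clear-weather range of 200 m for both sensors. The 200 m baseline is an assumption for illustration only, not a figure from the testing described above.

```python
def degraded_range(baseline_m: float, retained_fraction: float) -> float:
    """Return the effective range left after weather degradation."""
    if not 0.0 <= retained_fraction <= 1.0:
        raise ValueError("retained_fraction must be between 0 and 1")
    return baseline_m * retained_fraction


BASELINE_M = 200.0  # hypothetical clear-weather range, assumed equal for both sensors

lidar_worst = degraded_range(BASELINE_M, 1.0 - 0.80)  # 80% reduction
lidar_best = degraded_range(BASELINE_M, 1.0 - 0.60)   # 60% reduction
radar = degraded_range(BASELINE_M, 0.95)              # 95% of baseline retained

print(f"LiDAR in fog: {lidar_worst:.0f} to {lidar_best:.0f} m")
print(f"Radar in fog: at least {radar:.0f} m")
```

Under that assumed baseline, LiDAR would see roughly 40 to 80 m while radar would still reach about 190 m.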

For systems deployed in mining, agriculture, port operations, or security applications, this difference is not theoretical—it defines whether operations continue or halt when conditions deteriorate.

The Path Forward: Sensor Fusion with Radar at the Core

The most robust perception architectures now position 4D imaging radar as the primary reliability layer, with cameras and LiDAR providing complementary data when conditions permit. This approach ensures graceful degradation rather than catastrophic failure.
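One way to picture graceful degradation is a confidence-gated fusion step: radar always contributes, while camera and LiDAR estimates are included only when their quality is high enough. The sketch below is a deliberately simplified illustration. The `SensorReading` type, the self-reported `confidence` score, and the 0.5 cutoff are all assumptions, and real fusion stacks typically use probabilistic filters rather than a weighted average.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorReading:
    """Hypothetical per-sensor range estimate for a single tracked object."""
    name: str
    range_m: Optional[float]  # None when the sensor returned nothing
    confidence: float         # 0.0 to 1.0, assumed to be reported by the sensor pipeline


def fuse_range(
    radar: SensorReading,
    optical: list[SensorReading],
    min_confidence: float = 0.5,  # assumed cutoff for trusting optical sensors
) -> Optional[float]:
    """Confidence-weighted range estimate with radar as the always-on baseline."""
    candidates = [radar] + [r for r in optical if r.confidence >= min_confidence]
    usable = [r for r in candidates if r.range_m is not None and r.confidence > 0.0]
    if not usable:
        return None
    total_weight = sum(r.confidence for r in usable)
    return sum(r.range_m * r.confidence for r in usable) / total_weight


# Usage: the same object in clear weather and in dense fog.
radar = SensorReading("radar", 50.0, 0.8)
clear = [SensorReading("lidar", 50.4, 0.9), SensorReading("camera", 49.0, 0.6)]
foggy = [SensorReading("lidar", None, 0.1), SensorReading("camera", 62.0, 0.2)]

print(fuse_range(radar, clear))  # all three sensors contribute
print(fuse_range(radar, foggy))  # optical readings are gated out, radar carries the estimate
```

The design choice here is that losing the optical sensors narrows the estimate to radar alone instead of producing no output, which is the behavior the architecture above is meant to guarantee.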

As autonomous systems move from controlled environments to real-world deployment, weather resilience transitions from "nice to have" to "mission critical." The physics of radar make it uniquely suited to deliver reliable perception when it matters most.